In his paper "On Computable Numbers", Turing proposed a way of performing mechanical procedures on binary inputs to test whether mathematical functions could be computed from a set of simple rules. The Turing machine, as it came to be called, worked on a tape which it could move in both directions and on which it could write and erase binary symbols. He proved that there was a Universal Turing Machine which, given a description of any Turing machine, could carry out the particular computation of that machine. Modern computers and their stored programmes implement Universal Machines. Von Neumann, following Turing, began to think about how machines could build other machines. He discovered that he could define a Universal Construction Machine which, given the description of any Constructor, could construct it. This led him to define the logical structure of a self-reproducing machine. Remarkably, an implementation did not have to be invented: it exists in all biological systems. Such a machine requires a description of itself to build a copy of itself, but it must also copy these instructions and insert the copy into the new machine to complete the task. Thus Schrödinger was wrong when he wrote that the chromosome contained both the plan for the organism and the means to execute it; in fact, chromosomes contain only a description of the means of execution.
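The idea that one general procedure can carry out the computation of any machine, given only that machine's description, can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration and not Turing's own notation: the function `run_machine`, the rule-table encoding, the `"_"` blank symbol and the example `INVERTER` machine.

```python
def run_machine(description, tape, state="start", blank="_", max_steps=1000):
    """Run the machine specified by `description` on `tape`.

    `description` maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". This encoding is a hypothetical convention.
    A missing rule raises KeyError; `max_steps` guards against non-halting runs.
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        write, move, state = description[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Illustrative machine description: flip every binary symbol, halt at the first blank.
INVERTER = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

print(run_machine(INVERTER, "1011"))  # 0100
```

The interpreter itself never changes; only the description passed to it does. In this toy setting, that is the sense in which a single machine can stand in for any other.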

This notion of computation is, in my opinion, the only valid approach to biological complexity, and it is opposed to many of the ideas underlying what has come to be called systems biology, which is very fashionable today. It will be shown that systems biology attempts to solve inverse problems, that is, to obtain models of biological systems from observations of their behaviour, whereas what I call computational biology continues in the classical mode of discovering the machinery of the system and computing its behaviour, thereby solving a forward problem.

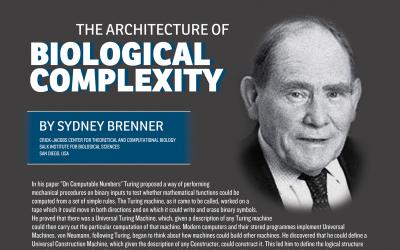

This lecture is part of the discussion meeting The Role of Theory in Biology with Prof. Sydney Brenner.